Table of Contents

Final deliverables

Video

Final prototype

Process

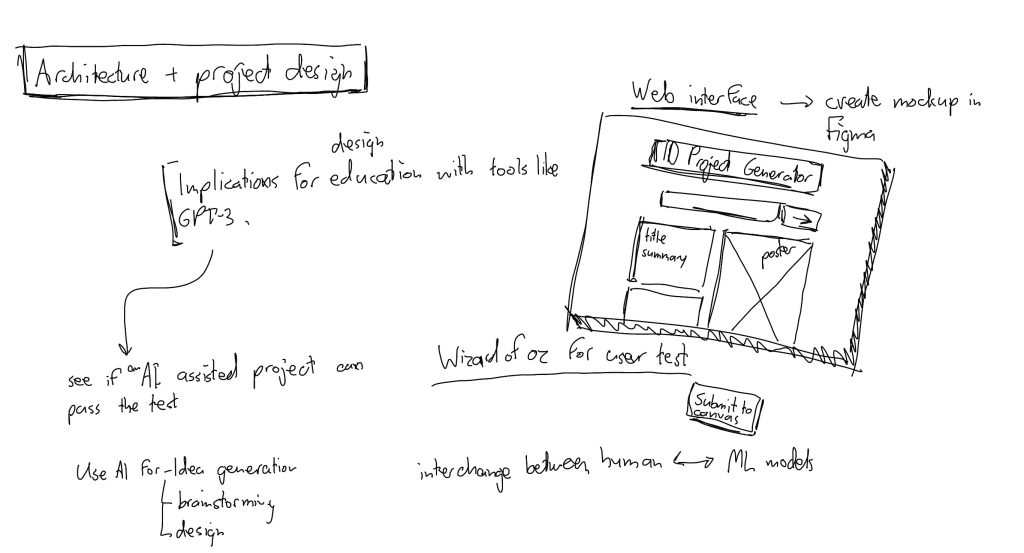

Starting point

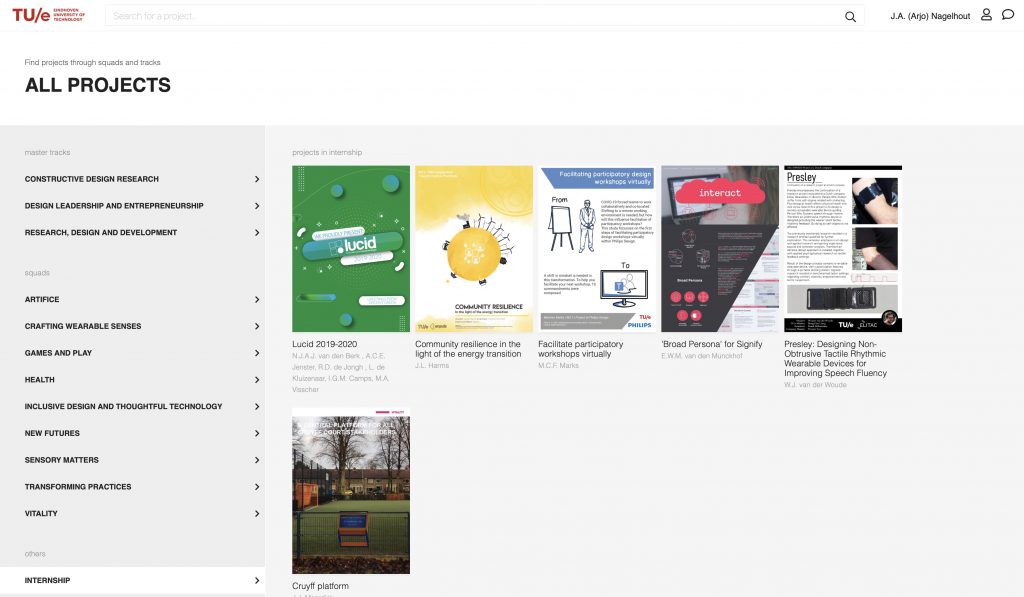

This project started as an exploration of using machine learning to generate novel design projects, based on all design projects of the past three years at Industrial Design at the Eindhoven University of Technology. The data comes from the demoday platform at demoday.id.tue.nl.

Data collection using web scraping

Data collection requires web scraping, because the demoday website has no public API. I first tried https://www.parsehub.com, because the data is stored behind an authentication page, which makes manual scraping with Beautiful Soup and Python harder.

A tutorial on how to use Parsehub: https://www.parsehub.com/blog/web-scrape-login/

This produced a CSV file with all project URLs. Unfortunately, Parsehub kept crashing after this step with an assertion failure, but the URL list is a workable start. With 957 projects in total, collecting the remaining data manually is not feasible.

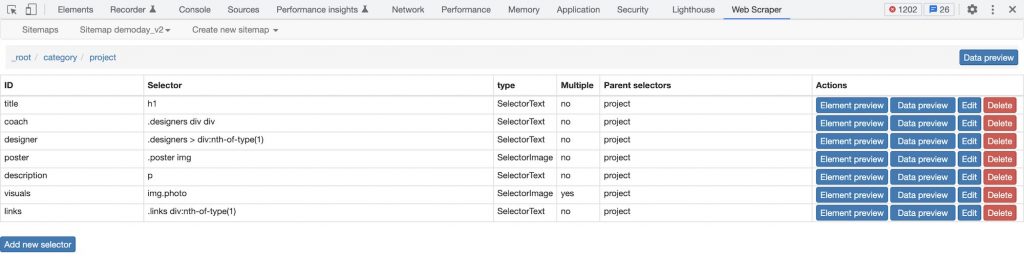

That’s why I’m using webscraper.io, a Chrome browser extension that makes it easy to scrape web pages that require a login. Documentation for the tool is available here: https://webscraper.io/documentation/scraping-a-site.

The following configuration for the Web Scraper extension was used to generate the data:

Data processing using Jupyter Notebook

Now the data needs to be processed using pandas and Jupyter Notebook. Next, the images need to be downloaded.

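    import os
    import time
    import urllib.request

    # Create the output folder if it does not exist yet.
    os.makedirs('poster_images', exist_ok=True)

    # df_posters is the DataFrame with the scraped poster data.
    for index, row in df_posters.iterrows():
        url = row['poster-src']
        # Use the last part of the URL as the file name.
        filename = url.rsplit('/', 1)[1]
        print(filename)
        pathname = os.path.join('poster_images', filename)
        urllib.request.urlretrieve(url, pathname)
        # time.sleep(1)  # optional pause between downloads

Machine learning model training for posters and visuals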

In this section I explore how to generate images from this sample set (913 posters and 3072 visuals). Currently, Dall-E 2 by OpenAI and Imagen by Google are the state of the art in generating images from text input. We can use Google Colab to train machine learning models in the cloud.

I’m using the video "Training NVIDIA StyleGAN2 ADA under Colab Free and Colab Pro Tricks" by Jeff Heaton as a starting point. Producing reasonable results would likely take more than 24 hours of training on Google Colab Pro.

What are GANs

To generate new images, we use an architecture called a Generative Adversarial Network (GAN). It consists of two networks that are trained against each other: a generator that creates images and a discriminator that tries to tell generated images apart from real ones. In the setup described in the article linked below, the generator is composed of an encoder and a decoder. Both are convolutional neural networks, but the decoder works in reverse. The encoder receives an image and encodes it into a condensed representation. The decoder takes this representation and creates a new image with a different style.

https://www.louisbouchard.ai/how-ai-generates-new-images/
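The tug-of-war between generator and discriminator can be made concrete by looking at the loss each side tries to minimise. The block below is a minimal sketch in plain Python, not the StyleGAN2-ADA training code: it assumes the discriminator outputs a probability that an image is real, uses binary cross-entropy with the common non-saturating generator loss, and the probability values are made up for illustration.

```python
import math


def bce(prob: float, target: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, eps), 1.0 - eps)  # keep log() away from 0
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(real_probs, fake_probs):
    """The discriminator wants real images scored 1 and generated ones 0."""
    real = sum(bce(p, 1.0) for p in real_probs) / len(real_probs)
    fake = sum(bce(p, 0.0) for p in fake_probs) / len(fake_probs)
    return real + fake


def generator_loss(fake_probs):
    """The generator wants its images to be scored as real (close to 1)."""
    return sum(bce(p, 1.0) for p in fake_probs) / len(fake_probs)


# Hypothetical discriminator outputs: probability that an image is real.
real_probs = [0.9, 0.8]  # scores for real posters
fake_probs = [0.2, 0.1]  # scores for generated posters

print(discriminator_loss(real_probs, fake_probs))
print(generator_loss(fake_probs))
```

With these made-up scores the discriminator is winning: its loss is low while the generator's loss is high. During training the two losses pull against each other until the generated images become hard to tell apart from the real ones.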

StyleGAN2-ADA PyTorch implementation

Tips for training a GAN faster

Projected GANs for faster training compared to StyleGAN2-ADA

Despite significant progress in the field, training GANs from scratch is still no easy task, especially for smaller datasets. Luckily, Axel Sauer and the team at the University of Tübingen came up with Projected GAN, which achieves state-of-the-art-level FID (Fréchet Inception Distance) scores in hours instead of days and works on even the tiniest datasets. The new training method uses a pretrained network to obtain embeddings of real and fake images, which the discriminator then processes. Additionally, feature pyramids provide multi-scale feedback from multiple discriminators, and random projections make better use of the deeper layers of the pretrained network.

https://www.casualganpapers.com/data-efficient-fast-gan-training-small-datasets/ProjectedGAN-explained.html
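To give a feel for the random-projection idea, the sketch below mixes the channels of a feature map with fixed random weights at every spatial position, which is what a 1x1 convolution with frozen random weights does. It is a loose illustration in plain Python only: the feature values, sizes and function names are made up, the pretrained network is not included, and the multi-scale mixing and the discriminators of the actual method are left out.

```python
import random


def make_projection(in_channels: int, out_channels: int, seed: int = 0):
    """Fixed random channel-mixing weights; these are never trained."""
    rng = random.Random(seed)
    scale = (2.0 / in_channels) ** 0.5
    return [[rng.gauss(0.0, scale) for _ in range(in_channels)]
            for _ in range(out_channels)]


def project(feature_map, weights):
    """Mix channels at every spatial position (like a 1x1 convolution).

    feature_map[y][x] is the list of channel values at one position.
    """
    return [[[sum(w * c for w, c in zip(row_w, pixel)) for row_w in weights]
             for pixel in row]
            for row in feature_map]


# Hypothetical 2x2 feature map with 3 channels, standing in for the output
# of one layer of a frozen pretrained network.
features = [[[0.1, 0.5, -0.2], [0.3, 0.0, 0.9]],
            [[-0.4, 0.2, 0.7], [0.6, -0.1, 0.0]]]

weights = make_projection(in_channels=3, out_channels=4)
projected = project(features, weights)
print(len(projected), len(projected[0]), len(projected[0][0]))  # 2 2 4
```

Because the weights come from a fixed seed and are never updated, the discriminator in this sketch would always see the same projection of the pretrained features.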

A side step into generating project posters using pre-trained models and services

Text generation (project description and project title generation)

Generating images will be put on hold for now, because this will take significant training time. So now I’m going to focus on text generation, for example using RNNs.

https://www.tensorflow.org/text/tutorials/text_generation

This tutorial uses a character-based approach, which results in grammatical errors and nonsensical sentences.
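Why a character-based model drifts into nonsense is easy to see with an even simpler stand-in. The sketch below is not the RNN from the tutorial but a character-level Markov chain: it only looks at the last few characters to pick the next one, so it has no notion of words or grammar. The training text is made up and stands in for the scraped project descriptions.

```python
import random
from collections import defaultdict


def build_model(text: str, order: int = 3):
    """Map each `order`-character context to the characters that follow it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        model[text[i:i + order]].append(text[i + order])
    return model


def generate(model, seed_text: str, length: int = 80, rng=None) -> str:
    """Extend seed_text one character at a time."""
    rng = rng or random.Random(0)
    order = len(next(iter(model)))  # all contexts have the same length
    out = seed_text
    for _ in range(length):
        followers = model.get(out[-order:])
        if not followers:  # context never seen in the training text
            break
        out += rng.choice(followers)
    return out


# Made-up training text standing in for the scraped project descriptions.
corpus = ("This project explores how designers can use data. "
          "This prototype explores how users share data at home. ")
model = build_model(corpus, order=3)
print(generate(model, "Thi", length=60))
```

Every short run of characters in the output looks plausible because it was seen during training, but nothing keeps the sentence as a whole coherent.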

https://www.kaggle.com/code/shivamb/beginners-guide-to-text-generation-using-lstms/notebook

Maybe we can use the descriptions of projects as a starting point with GPT-3 or a pre-trained model.

Generation of a project description using a prompt

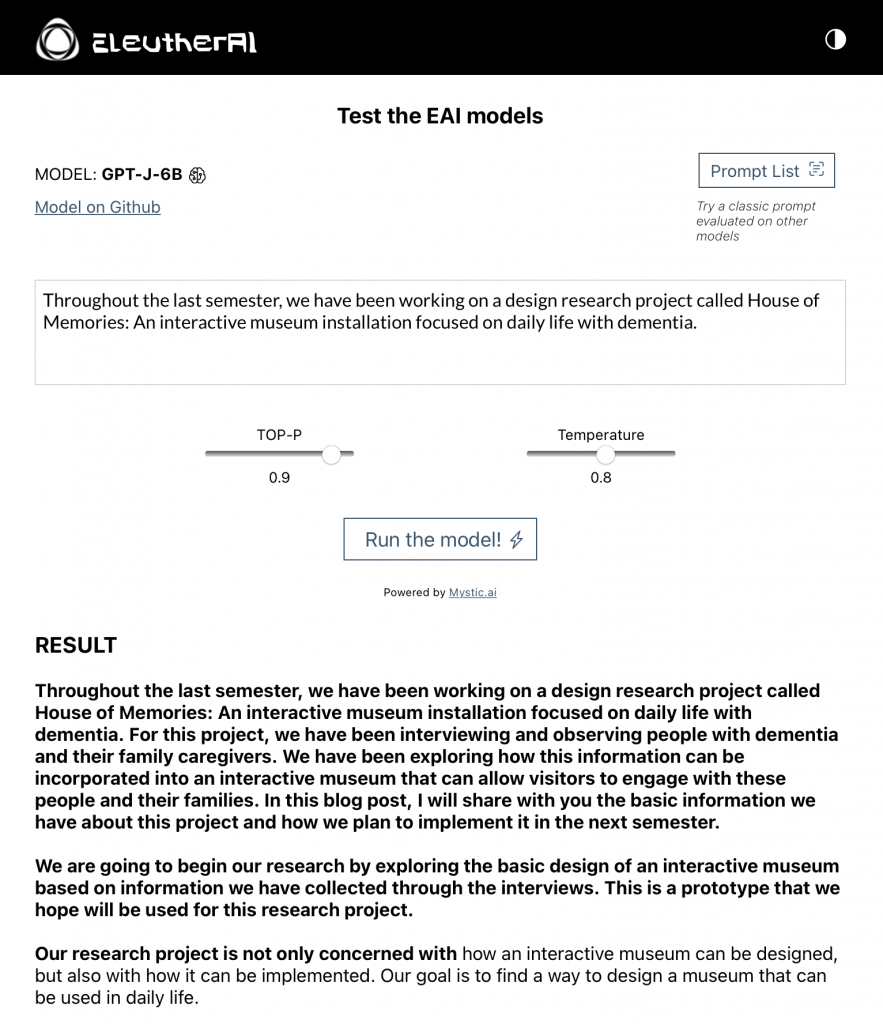

Here we use a prompt to generate a project description with GPT-J, an open-source alternative to GPT-3 that is less capable. It can be tried in the online demo at https://6b.eleuther.ai.

While it produces reasonably sensible results, it does not consistently produce results that are usable. This will result in an interplay between the end user and the AI model, where the end user has to manually pick which result is the best and bin parts of the generated text that are unwanted.

The following text was generated with the tool, with the bold text being the input text (prompt).

The design research project UrbanAR, an Augmented Reality Design Tool for Urban Design and Planning. The aim of the project is to present a tool that can be used by planners, architects, urban designers and anyone involved in urban planning, to design, plan and implement the urban environment. The tool will be capable of offering a comprehensive view of urban design options, displaying them in an immersive and interactive 3D environment.

https://6b.eleuther.ai
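Part of the manual clean-up described above can be sketched in code. The block below is a minimal illustration and does not call GPT-J: it assumes the prompt is the opening sentence with the project title and subtitle (the source does not say exactly where the prompt ends), the helper names are hypothetical, and the raw model output is made up. It builds a prompt and trims a generated text back to its last complete sentence.

```python
def build_prompt(title: str, subtitle: str) -> str:
    """Turn a title and subtitle into an opening sentence for the model."""
    return f"The design research project {title}, {subtitle}."


def trim_to_last_sentence(text: str) -> str:
    """Drop a trailing fragment that does not end in '.', '!' or '?'."""
    end = max(text.rfind(mark) for mark in ".!?")
    return text[:end + 1] if end != -1 else text


prompt = build_prompt(
    "UrbanAR",
    "an Augmented Reality Design Tool for Urban Design and Planning",
)
# Hypothetical raw model output that stops in the middle of a sentence.
raw = prompt + " The aim of the project is to present a tool. The tool will be cap"
print(trim_to_last_sentence(raw))
```

Picking the best of several generated candidates still needs a person; the trimming step only removes the most obvious kind of unwanted text.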

Further steps in the project

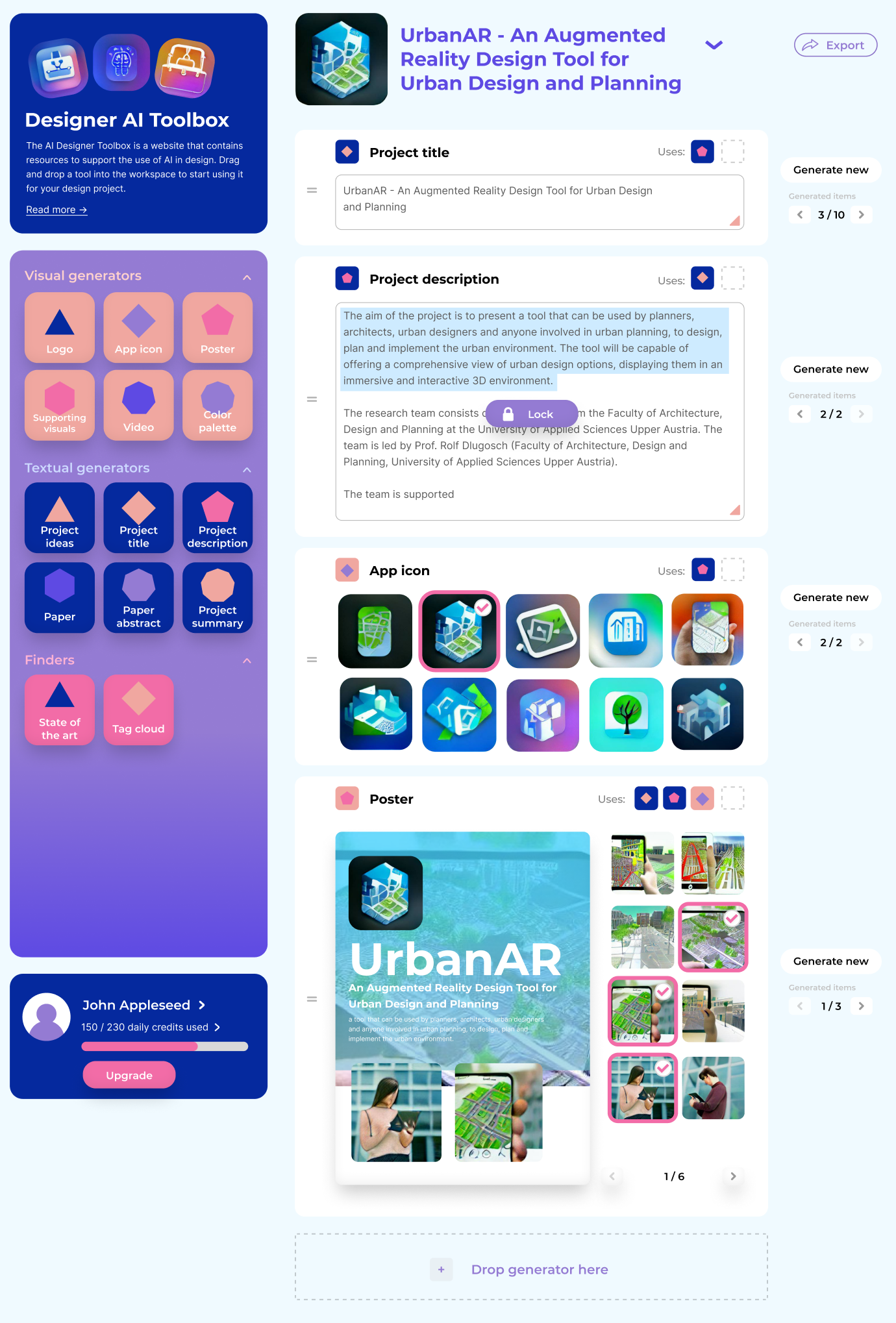

The poster, icons and text in the prototype are generated using the following two tools: GPT-J and Dall-E mini.

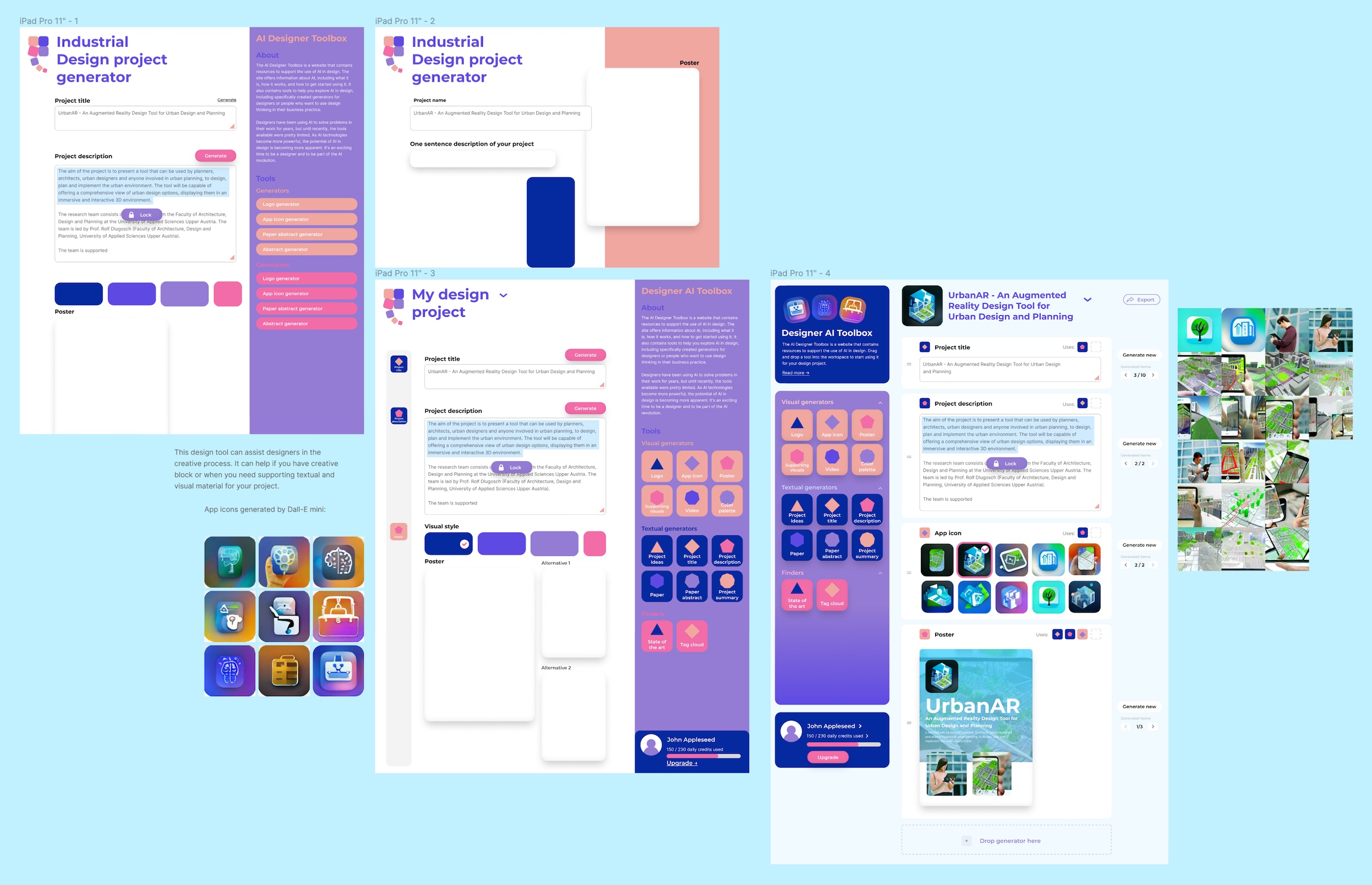

Using a Wizard-of-Oz approach and Figma, this can be tested with users.

Linking to a broader context of the interplay between designers and AI

The current state of the art in machine learning models for generating text and images is far more advanced than what I have been able to integrate in this project, as can be seen in the following videos, but these models are not yet available to the public. They will gradually become more accessible to everyone, allowing designers to integrate these tools in the creative process. How this might look is explored in the initial prototype that is shown after this section.

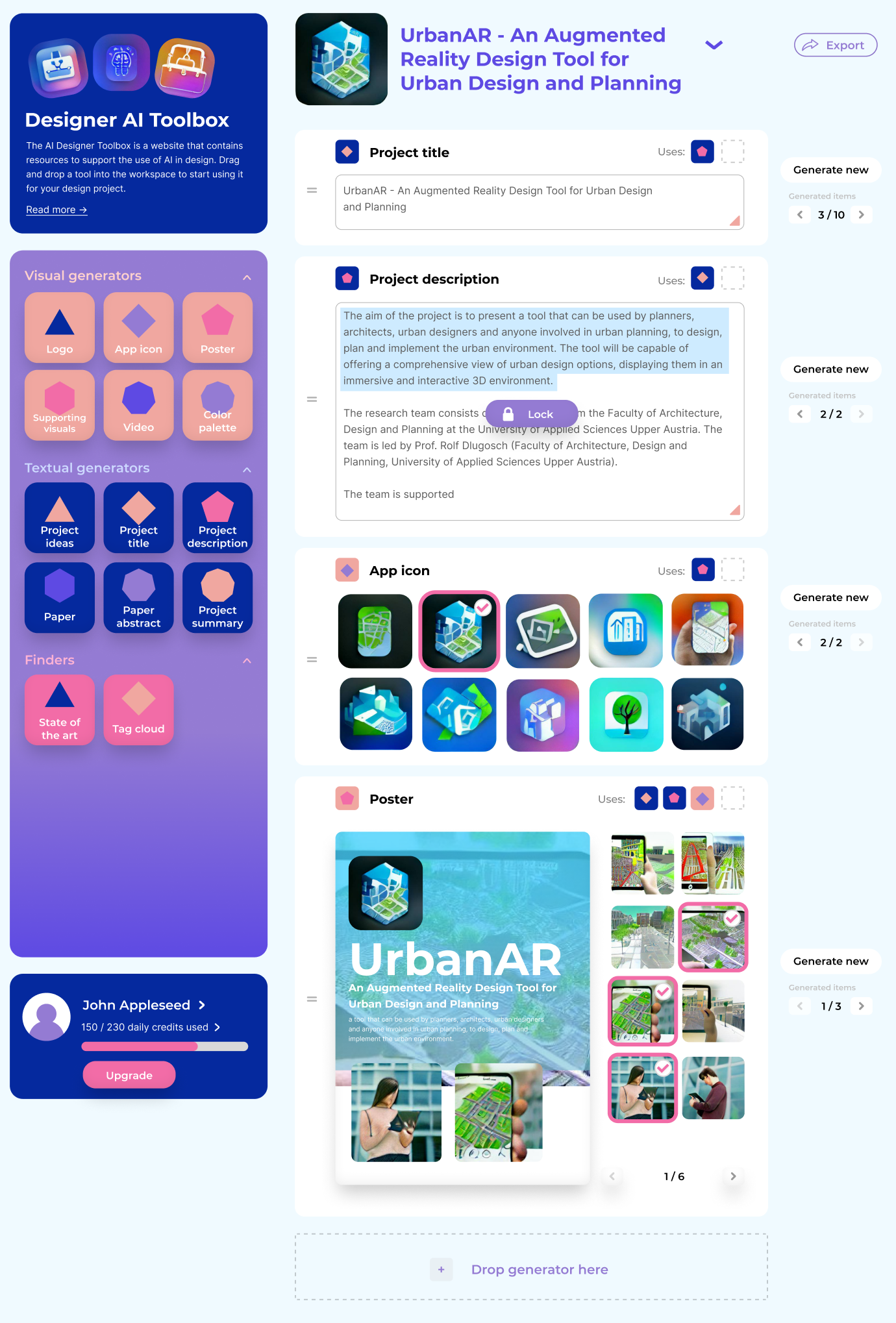

Final design

The final design showcases what a future toolbox that makes AI usable for a designer might look like. It incorporates the idea of modular blocks of data that can all be generated using pre-trained AI models such as GPT-3 and Dall-E 2. Each block can use the data from the other blocks as its prompt: for example, the project title can be generated from the project description and vice versa. The poster can be generated
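The idea of modular blocks that feed each other's prompts could be represented roughly as follows. This is a sketch under assumptions, not the prototype's implementation: the `Block` structure, the prompt layout and the `fake_model` placeholder are all hypothetical, and a real version would call a pre-trained model instead.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Block:
    name: str                                         # e.g. "title"
    sources: List[str] = field(default_factory=list)  # blocks used as prompt
    content: str = ""


def regenerate(block: Block, blocks: Dict[str, Block],
               model: Callable[[str], str]) -> str:
    """Build a prompt from the source blocks and store the model's answer."""
    context = "\n".join(f"{s}: {blocks[s].content}" for s in block.sources)
    prompt = f"{context}\n{block.name}:"
    block.content = model(prompt)
    return block.content


def fake_model(prompt: str) -> str:
    """Placeholder for a pre-trained model; returns a canned string."""
    return f"<generated from {len(prompt)} prompt characters>"


blocks = {
    "description": Block("description", content="An AR tool for urban planning."),
    "title": Block("title", sources=["description"]),
}
regenerate(blocks["title"], blocks, fake_model)
print(blocks["title"].content)
```

Generating the description from the title instead only requires swapping the `sources` lists, which is what makes the blocks modular.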

Evaluation

Todo